Overview

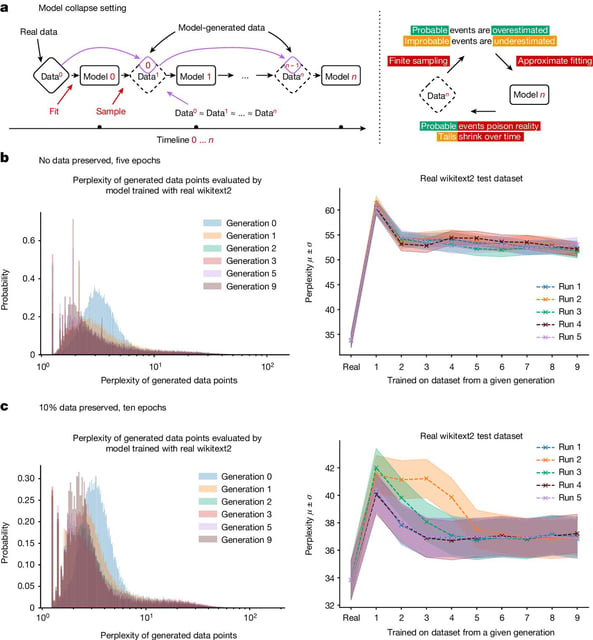

- Studies show that AI models trained on AI-generated data degrade quickly, losing information about the original data and eventually producing gibberish.

- Researchers call this phenomenon 'model collapse'; it can set in within just a few generations of recursive training, in which each model learns from data produced by its predecessor.

- The problem is growing because AI-generated content is proliferating online, which makes original human-generated data harder to find for training.

- Proposed solutions include watermarking AI-generated content and stricter vetting of training datasets.

- Tech firms may need to rely more on human-generated content to maintain model accuracy and diversity.
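The following Python sketch is a toy analogy only. It is not the setup used in the studies summarized above. It mimics recursive training with a one-dimensional Gaussian: each "generation" fits a mean and variance to a handful of samples drawn from the previous generation's fit and never sees the original data again. The function name `simulate_collapse` and its parameters (200 generations, 10 samples per generation) are illustrative assumptions.

```python
import random
import statistics


def simulate_collapse(generations=200, n_samples=10, seed=0):
    """Toy model of recursive training (illustrative assumption, not the
    studies' method): each generation fits a Gaussian to a small sample
    drawn from the previous generation's fitted Gaussian."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the original "human" data
    history = [sigma ** 2]
    for _ in range(generations):
        # Sample synthetic data from the current model...
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # ...then fit the next model to that synthetic data only
        # (maximum-likelihood mean and population standard deviation).
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma ** 2)
    return history


variances = simulate_collapse()
for gen in (0, 10, 50, 100, 200):
    print(f"generation {gen:3d}: variance {variances[gen]:.3g}")
```

Because each fit uses the population variance of only ten samples, the expected variance shrinks by a factor of (n - 1)/n per generation, and sampling noise compounds across generations. As a result, the fitted distribution narrows and rare values from the original data stop appearing.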