Overview

- 94% of AI-generated exam submissions were not identified as such by human markers.

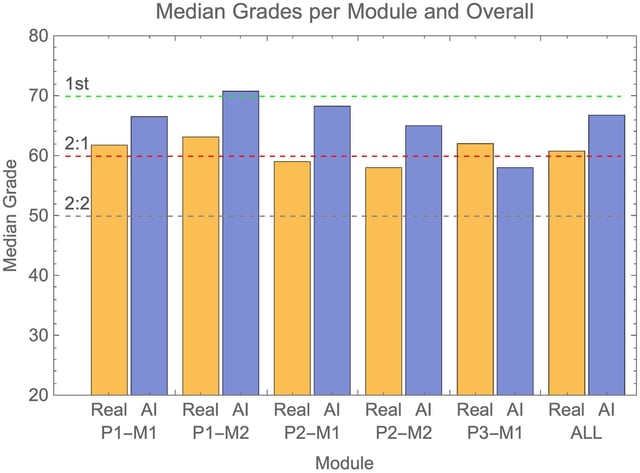

- AI-written answers scored higher than real student submissions in over 83% of cases.

- The study highlights the challenge of maintaining academic integrity as generative AI becomes more widespread.

- Researchers suggest educational institutions must adapt to the evolving presence of AI.

- The researchers call for revising assessment methods to address AI's impact on academic evaluations.