Overview

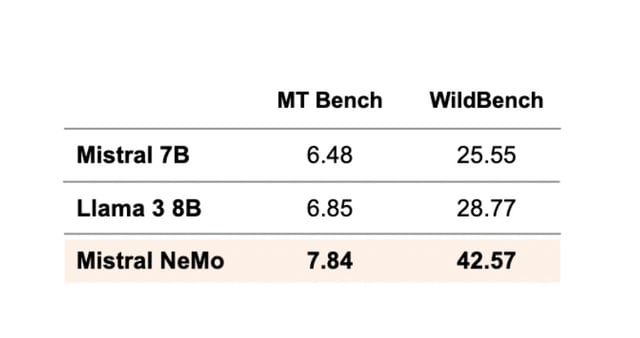

- Mistral NeMo has a 128,000-token context window, so it can take long documents or extended conversations as a single input.

- The model supports multiple languages, including English, French, German, Spanish, and more.

- It uses the FP8 data format, which lowers memory use while supporting high performance and portability across enterprise applications.

- Developed in collaboration with NVIDIA, the model can run efficiently on cost-effective hardware such as RTX GPUs.

- Mistral NeMo is available under the Apache 2.0 license, which permits commercial use and makes broad adoption straightforward.
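
To make the FP8 point concrete, here is a back-of-the-envelope sketch of how numeric precision affects the memory needed just to hold a model's weights. The parameter count of roughly 12 billion is an assumption (it is not stated in the list above), and the function name `weight_memory_gib` is purely illustrative. The estimate ignores the KV cache, activations, and runtime overhead, so real memory use will be higher.

```python
# Rough estimate of weight memory at different precisions.
# ASSUMPTION: about 12 billion parameters; this figure is not given in the text above.
NUM_PARAMS = 12_000_000_000

# Bytes used to store one parameter in each format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Return the memory needed for the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in ("fp32", "fp16", "fp8"):
        print(f"{dtype}: {weight_memory_gib(NUM_PARAMS, dtype):.1f} GiB")
```

Under these assumptions, FP8 halves the weight footprint relative to FP16, which is one reason a model of this size can fit on a single workstation-class GPU.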