Overview

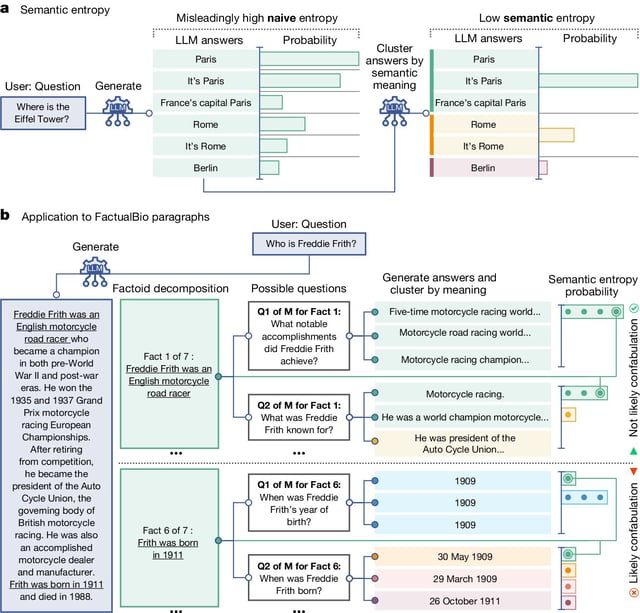

- The method separates uncertainty about what an AI model means (factual uncertainty) from uncertainty about how it words an answer (phrasing uncertainty).

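- It calculates 'semantic entropy': the model answers the same question several times, the answers are grouped by meaning, and the entropy across those groups measures how consistent the answers are.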

- The technique outperforms previous methods in detecting AI errors across various datasets.

- It is computationally intensive, because each question requires sampling several answers and comparing their meanings, but it can make AI systems more reliable in high-stakes applications.

- Experts caution that while promising, the method doesn't address all types of AI errors.
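The semantic entropy calculation can be sketched in a few lines. This is a minimal illustration, not the researchers' implementation. The helper names (`toy_entails`, `same_meaning`, `cluster_by_meaning`) are hypothetical, and the meaning check is a crude stand-in: two answers count as equivalent when they match after lowercasing and stripping punctuation. A real system would instead ask a language or entailment model whether each answer implies the other. Entropy is then estimated from how often each meaning cluster appears among the sampled answers.

```python
import math
from collections import Counter
from typing import Callable, List


def toy_entails(premise: str, hypothesis: str) -> bool:
    """Hypothetical stand-in for an entailment model: answers 'entail' each
    other only if they are identical after lowercasing and dropping punctuation."""
    def norm(s: str) -> str:
        return "".join(ch for ch in s.lower() if ch.isalnum() or ch.isspace()).strip()
    return norm(premise) == norm(hypothesis)


def same_meaning(a: str, b: str) -> bool:
    """Two answers share a meaning if each one entails the other."""
    return toy_entails(a, b) and toy_entails(b, a)


def cluster_by_meaning(answers: List[str],
                       equivalent: Callable[[str, str], bool]) -> List[List[str]]:
    """Greedily group answers: each answer joins the first cluster whose
    first member it is judged equivalent to, else it starts a new cluster."""
    clusters: List[List[str]] = []
    for ans in answers:
        for cluster in clusters:
            if equivalent(cluster[0], ans):
                cluster.append(ans)
                break
        else:
            clusters.append([ans])
    return clusters


def semantic_entropy(answers: List[str],
                     equivalent: Callable[[str, str], bool] = same_meaning) -> float:
    """Entropy (in nats) of the distribution over meaning clusters."""
    n = len(answers)
    clusters = cluster_by_meaning(answers, equivalent)
    return -sum((len(c) / n) * math.log(len(c) / n) for c in clusters)


def naive_entropy(answers: List[str]) -> float:
    """Entropy over exact answer strings, for comparison: wording differences count."""
    n = len(answers)
    return -sum((k / n) * math.log(k / n) for k in Counter(answers).values())


if __name__ == "__main__":
    # Four sampled answers to one question: three phrasings of one meaning, one outlier.
    answers = ["Paris", "paris.", "Paris!", "Lyon"]
    print(f"naive:    {naive_entropy(answers):.3f}")     # 1.386 (ln 4)
    print(f"semantic: {semantic_entropy(answers):.3f}")  # 0.562
```

Because the three spellings of "Paris" collapse into one cluster, the semantic entropy is well below the string-level entropy, so only the Paris-versus-Lyon disagreement registers as uncertainty. High semantic entropy would flag a likely unreliable answer.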